People can calibrate to changes in both their own abilities and their surrounding environments. Most calibration studies have examined recalibration to stable perturbations (i.e., a single, constant change). However, many real-world experiences involve perturbations that do not remain constant. To understand how unstable perturbations affect calibration in terms of postural sway and prospective control, a VR simulation was developed in which optic flow could be altered in an unstable fashion.

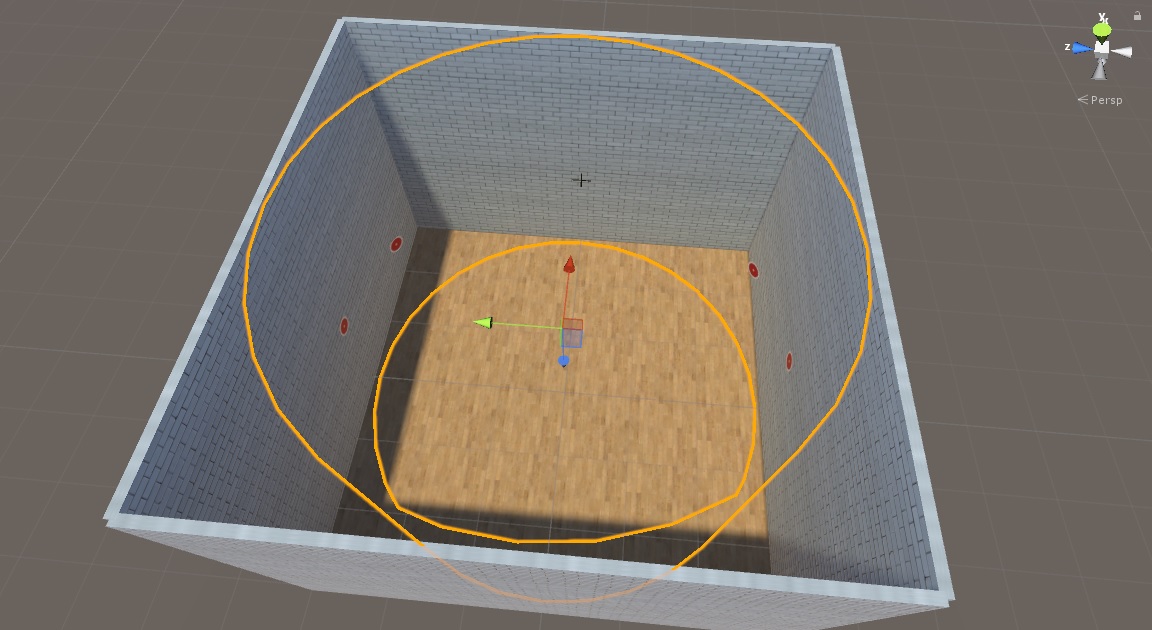

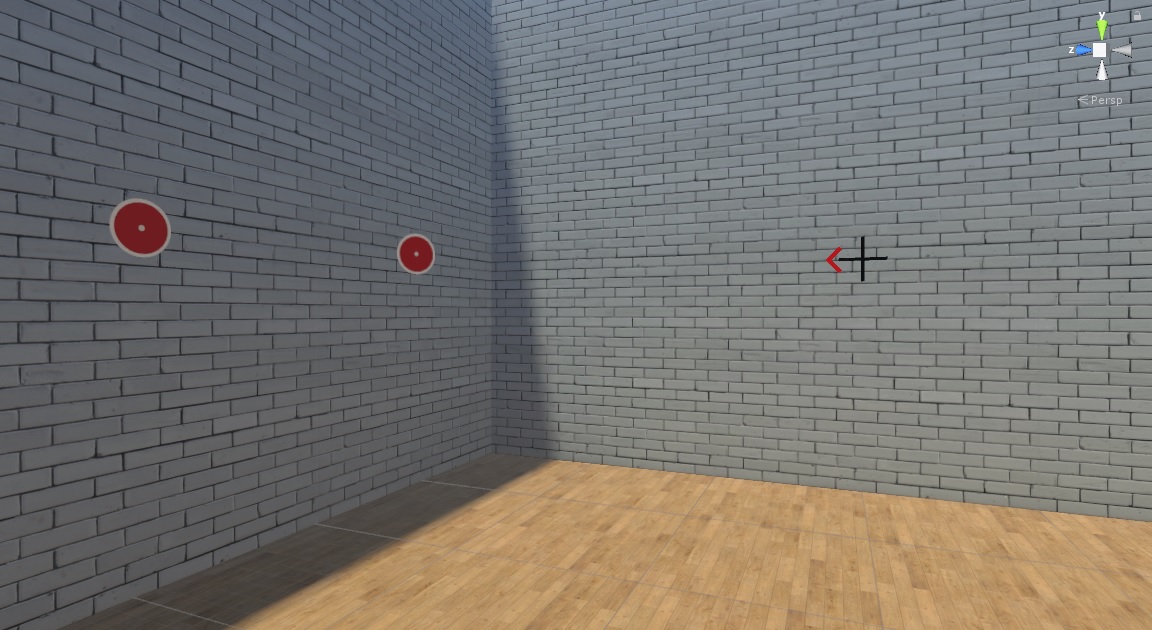

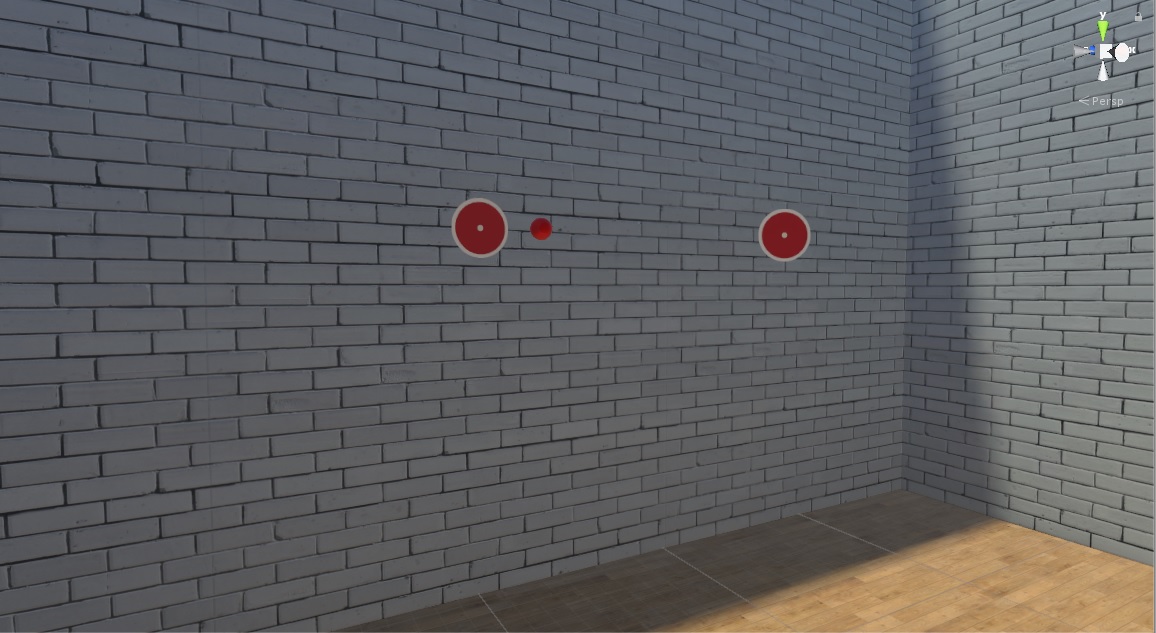

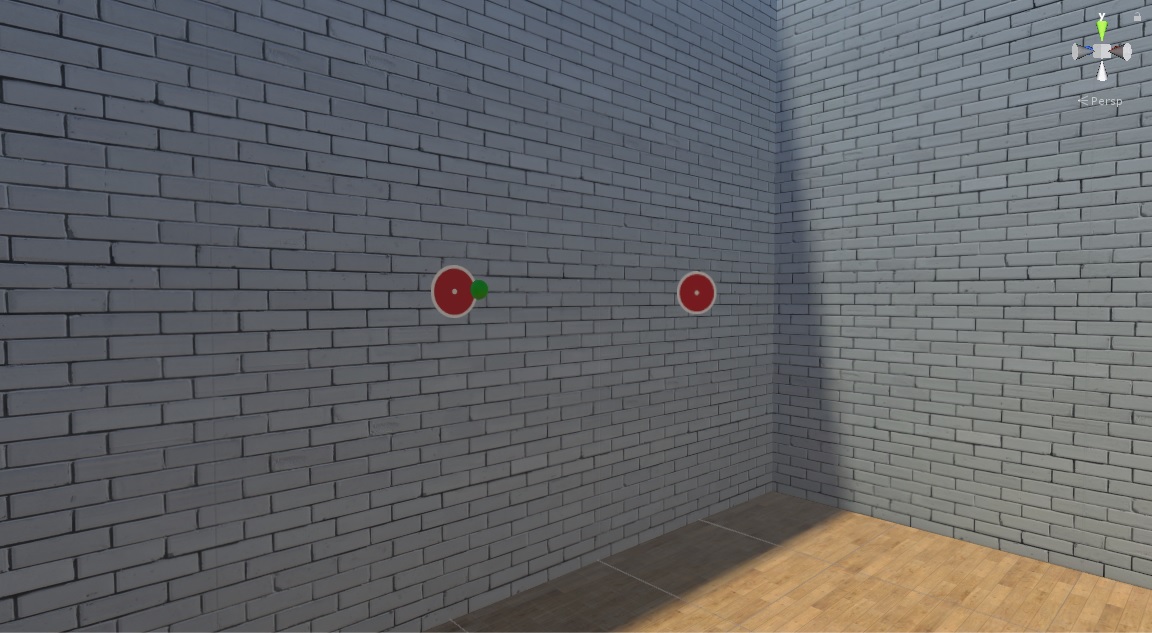

The simulation, created in Unity, features a virtual room with wooden floors and four brick-patterned walls. The scene contained four bullseye targets that the user was asked to shoot at while experiencing perceptual perturbations, which were induced by adding visual gain to the user's head rotation. The added rotational gain was either constant or variable, depending on the condition to which the user was assigned.
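The idea of constant versus varying rotational gain can be illustrated with a small sketch. This is not the Unity code used in the study, and the source does not describe how the gain was implemented. The function names, the per-frame accumulation scheme, the sinusoidal schedule, and all numeric values below are assumptions chosen only for illustration.

```python
import math
from typing import Callable, List


def constant_gain(gain: float = 1.5) -> Callable[[float], float]:
    """Return a gain schedule that ignores time (hypothetical stable condition)."""
    return lambda t: gain


def sinusoidal_gain(base: float = 1.5, amplitude: float = 0.5,
                    period_s: float = 4.0) -> Callable[[float], float]:
    """Return a gain schedule that oscillates around `base`.

    A sinusoid is only one possible way to make the gain unstable;
    it is an assumption, not the schedule used in the study.
    """
    return lambda t: base + amplitude * math.sin(2.0 * math.pi * t / period_s)


def virtual_yaw_trace(real_yaw_deg: List[float], dt: float,
                      gain_fn: Callable[[float], float]) -> List[float]:
    """Map sampled physical head yaw to virtual camera yaw.

    Each frame, the change in physical yaw is scaled by the current gain
    and accumulated, so a gain above 1 makes the scene rotate further
    than the head actually turned.
    """
    virtual = [real_yaw_deg[0]]
    for i in range(1, len(real_yaw_deg)):
        delta = real_yaw_deg[i] - real_yaw_deg[i - 1]
        virtual.append(virtual[-1] + gain_fn(i * dt) * delta)
    return virtual


# The same physical head turn under the two hypothetical conditions.
head_yaw = [0.0, 10.0, 20.0, 30.0]
stable = virtual_yaw_trace(head_yaw, dt=1.0, gain_fn=constant_gain(1.5))
unstable = virtual_yaw_trace(head_yaw, dt=1.0, gain_fn=sinusoidal_gain())
```

Under the constant schedule, every 10 degrees of head rotation produces the same amount of visual rotation, whereas under the varying schedule the same head movement produces a different visual rotation from one moment to the next, which is what makes the perturbation hard to calibrate to.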

The user shot at the targets with an HTC Vive controller while experiencing this visual rotational gain. The simulation provided visual and auditory feedback based on the participant's performance. Screenshots of the simulation are shown above.

For more details on the simulation and a detailed discussion of the outcomes, please see the dissertation work by Leah Hartman.